The Hidden Crisis Behind AI’s Broken Promises

The AI revolution is advancing rapidly, but it faces a significant roadblock. The issue isn’t computing power or algorithmic breakthroughs but something more fundamental: uncontrolled, unreliable data. While Silicon Valley celebrates the latest AI agents that can book dinner reservations or write emails, executives are quietly scaling back their multimillion-dollar AI initiatives. The reason? They’ve discovered the fatal flaw: AI built on bad data produces nothing but mediocre results.

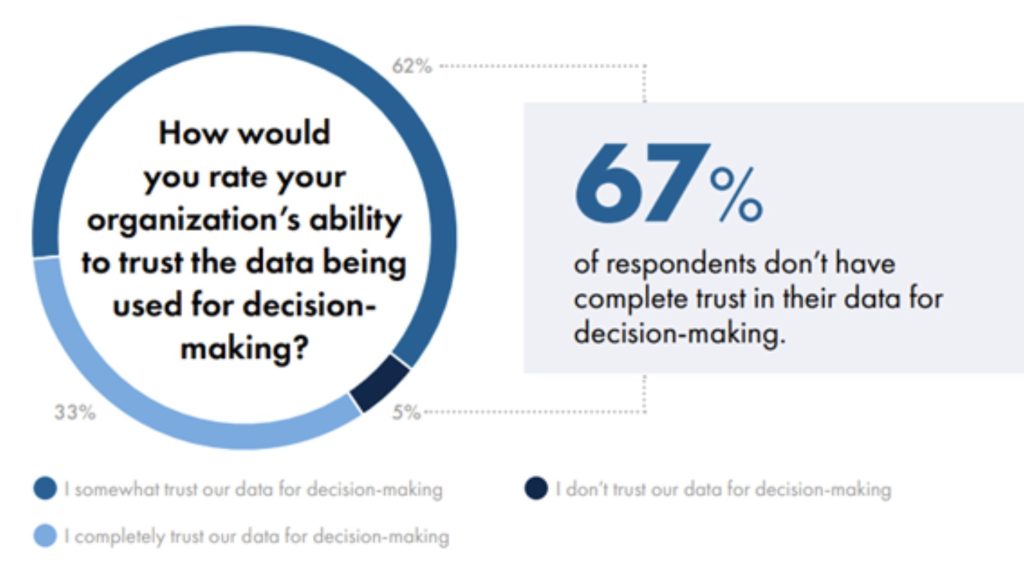

The numbers don’t lie. According to a study by NTT Data, 70 to 85% of generative AI deployments fail to meet their expected return on investment, and 67% of organizations say they don’t fully trust the data they use for decision-making. This isn’t a technical glitch; it’s a fundamental design flaw in how AI systems are built. Gartner predicts that over 40% of AI projects will be canceled by 2027, and the unpleasant truth is that much of today’s AI rests on an illusion. These systems don’t learn in any meaningful sense; they guess, drawing on mountains of unchecked, biased, or outright garbage data.

Why Current AI Agents Are Flying Blind

The problem isn’t that AI agents are inherently flawed; it’s that they’ve been fed corrupt data. Traditional centralized systems have created a “Black Box Dilemma”: inputs and outputs are visible, but the process between them is a minefield of bias, manipulation, and decay. Consider a realistic failure: an AI agent trained on market data recommends investments, but the data includes manipulated reports, outdated financial figures, or biased research. The result? The AI doesn’t just fail; it fails confidently, leaving the company with an error it has no way to trace or correct.

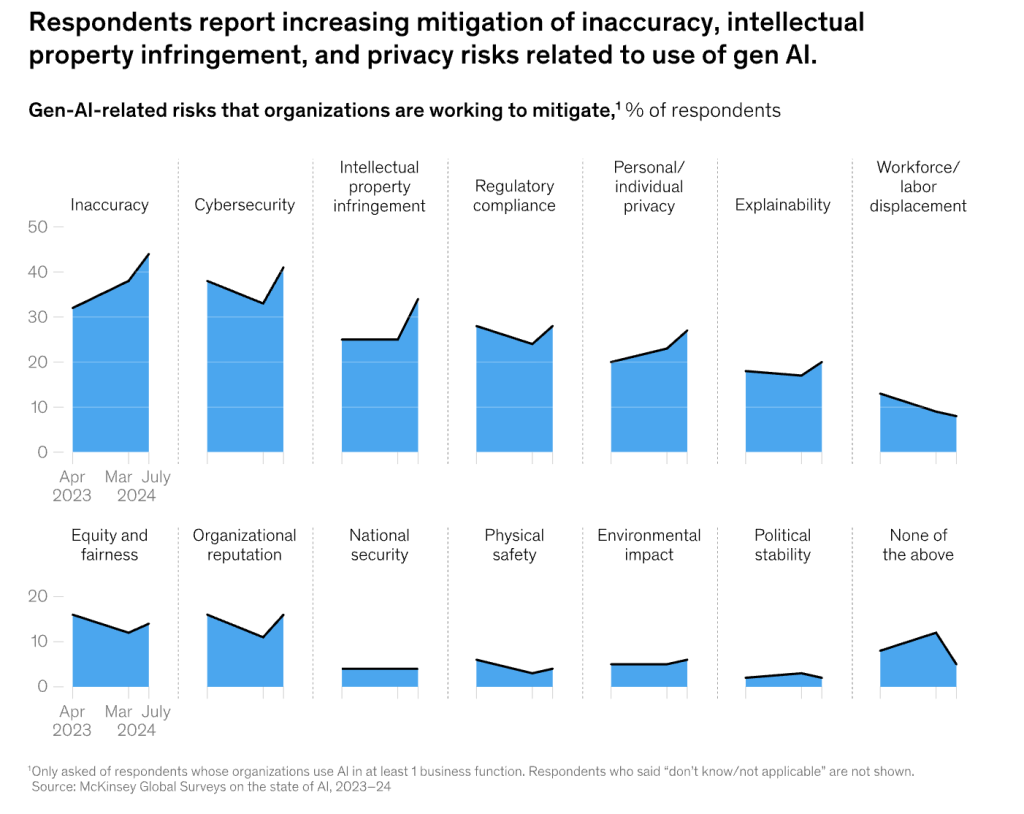

McKinsey research confirms the damage: poor data quality not only degrades performance but also actively erodes trust in AI systems. Once trust is broken, no amount of patching can restore it. The worst part? Tech giants know this, yet they hide behind “proprietary datasets” that no outsider can inspect. That isn’t just negligent; it’s fraudulent. Regulators, companies, and users are finally waking up to this reality.

Source: Exactly

Blockchain: The Missing Layer of Trust for AI Data

Here, blockchain stops being a buzzword and starts being a solution. Emerging decentralized data marketplaces now enable something that was previously impossible: real-time, cryptographically verified data streams for AI training. Projects at the forefront of this shift are already showing how it works: on-chain data pipelines that filter out noise, verify inputs, and ensure that only high-quality data reaches AI models. This isn’t just about transparency; it’s about building AI that is trustworthy by design.

Imagine every data point used to train an AI agent carrying a permanent, tamper-proof lineage: its source, every change made to it, and its validation history. Companies could then audit AI decisions the way they audit financial transactions. Suddenly, the black box becomes transparent: you can trace a decision back to any data point, check the credibility of its sources, and pinpoint where errors entered.
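To make the lineage idea concrete, here is a minimal sketch of a hash-chained, append-only log in Python. It is an illustration under stated assumptions, not how any particular blockchain or data marketplace is implemented: it assumes a single writer, uses SHA-256 from the standard library, and omits the distributed consensus and digital signatures a real ledger would add. The names `LineageLog`, `LineageEntry`, `append`, `verify`, and `trace` are hypothetical.

```python
import hashlib
import json
from dataclasses import dataclass

GENESIS = "0" * 64  # placeholder "previous hash" for the first entry


@dataclass
class LineageEntry:
    record_id: str   # which data point this event belongs to
    event: str       # e.g. "source", "transform", "validate"
    detail: dict     # free-form metadata about the event
    prev_hash: str   # hash of the entry before this one
    entry_hash: str  # hash committing to this entry's content and prev_hash


def hash_entry(record_id: str, event: str, detail: dict, prev_hash: str) -> str:
    # Canonical JSON (sorted keys) so identical content always yields the same digest.
    payload = json.dumps(
        {"record_id": record_id, "event": event, "detail": detail, "prev": prev_hash},
        sort_keys=True,
    )
    return hashlib.sha256(payload.encode("utf-8")).hexdigest()


class LineageLog:
    """Hypothetical append-only lineage log; each entry commits to its predecessor."""

    def __init__(self) -> None:
        self.entries: list[LineageEntry] = []

    def append(self, record_id: str, event: str, detail: dict) -> str:
        prev = self.entries[-1].entry_hash if self.entries else GENESIS
        h = hash_entry(record_id, event, detail, prev)
        # Store a copy so later changes to the caller's dict don't silently alter history.
        self.entries.append(LineageEntry(record_id, event, dict(detail), prev, h))
        return h

    def verify(self) -> bool:
        # Recompute the whole chain; any edit to a past entry makes this return False.
        prev = GENESIS
        for e in self.entries:
            if e.prev_hash != prev:
                return False
            if e.entry_hash != hash_entry(e.record_id, e.event, e.detail, prev):
                return False
            prev = e.entry_hash
        return True

    def trace(self, record_id: str) -> list[tuple[str, dict]]:
        # Recorded history (source, changes, validations) of one data point, in order.
        return [(e.event, e.detail) for e in self.entries if e.record_id == record_id]


if __name__ == "__main__":
    log = LineageLog()
    log.append("price-42", "source", {"origin": "exchange-feed-A"})
    log.append("price-42", "transform", {"op": "currency_convert"})
    log.append("price-42", "validate", {"check": "range", "passed": True})
    print(log.verify())  # True
    log.entries[1].detail["op"] = "manual_override"  # tamper with history
    print(log.verify())  # False
```

Because each entry’s hash also covers the previous entry’s hash, altering any past step makes `verify()` fail, and hiding the change would require recomputing every later entry. On a shared ledger, other participants holding copies of the chain would notice such a rewrite.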

Real-World Implementations: Building Trust

The skeptics are wrong: this isn’t just theory. Blockchain-verified AI is already at work in several industries, and decentralized data ecosystems are changing the way AI systems function. These implementations aren’t prototypes; they’re solving real business problems today. In global supply chains, for example, companies use blockchain to verify the authenticity of data flowing into their AI logistics systems. Each supplier update, inventory change, and shipping status is cryptographically signed and stored on-chain, so when problems arise, managers can trace them back to their exact origin.

Source: McKinsey
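The supply-chain example above hinges on one step: rejecting any update whose signature does not check out before it reaches the AI system. The Python sketch below illustrates just that step under explicit assumptions. It substitutes an HMAC over a shared secret (from the standard library’s `hmac` module) for the public-key signatures, such as ECDSA or Ed25519, that blockchains typically use, and it leaves out on-chain storage entirely. The supplier IDs, keys, message fields, and function names are hypothetical.

```python
import hashlib
import hmac
import json

# Hypothetical per-supplier secrets. A real system would use public-key
# signatures so the verifier never holds a key capable of signing.
SUPPLIER_KEYS = {
    "supplier-7": b"demo-secret-7",
    "supplier-9": b"demo-secret-9",
}


def canonical(update: dict) -> bytes:
    # Stable serialization so signer and verifier authenticate identical bytes.
    return json.dumps(update, sort_keys=True, separators=(",", ":")).encode("utf-8")


def sign_update(supplier_id: str, update: dict) -> str:
    key = SUPPLIER_KEYS[supplier_id]
    return hmac.new(key, canonical(update), hashlib.sha256).hexdigest()


def verify_update(supplier_id: str, update: dict, tag: str) -> bool:
    key = SUPPLIER_KEYS.get(supplier_id)
    if key is None:
        return False  # unknown source: reject outright
    expected = hmac.new(key, canonical(update), hashlib.sha256).hexdigest()
    return hmac.compare_digest(expected, tag)  # constant-time comparison


def accept_for_ai(messages):
    """Pass only authenticated updates downstream; set the rest aside for review."""
    accepted, rejected = [], []
    for supplier_id, update, tag in messages:
        if verify_update(supplier_id, update, tag):
            accepted.append(update)
        else:
            rejected.append((supplier_id, update))
    return accepted, rejected


if __name__ == "__main__":
    good = {"sku": "A-100", "status": "shipped", "qty": 40}
    tag = sign_update("supplier-7", good)
    forged = dict(good, qty=400)  # altered after signing
    ok, bad = accept_for_ai([
        ("supplier-7", good, tag),
        ("supplier-7", forged, tag),
        ("supplier-x", good, tag),
    ])
    print(len(ok), len(bad))  # 1 2
```

With real public-key signatures, each supplier would keep its private key and the verifier would hold only public keys, so a compromised verifier could not forge updates; the HMAC shortcut here only keeps the sketch free of third-party dependencies.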

The Dawn of Trust-Native AI Architecture

This concept is set to shape future projects in the form of so-called trust-native AI systems. These architectures don’t bolt on auditing as an afterthought; they build data provenance and authenticity into their fundamental design. This represents a paradigm shift in AI development. Where earlier generations of AI prioritized sophistication, next-generation systems will optimize for:

- Traceability: linking every decision back to its source

- Transparency: no more black boxes

- Verifiability: cryptographically enforced truth

The resulting AI agents may lack some of the flash of their predecessors, but they offer something far more valuable: reliability that can withstand enterprise-level scrutiny.

Why This Matters for Business Leaders

For managers grappling with the challenges of AI implementation, this technological development offers a clear way forward. Companies that recognize and act on this shift will achieve significant competitive advantages:

- Regulatory readiness: future-proof systems against upcoming transparency requirements

- Risk reduction: dramatically reduce errors due to poor data

- Stakeholder trust: build trust among customers, partners, and regulatory authorities

Most importantly, these solutions can salvage companies’ AI investments rather than forcing companies to write them off. Instead of starting failed projects over from scratch, they can rebuild them on verifiable data.

The Trust Revolution in AI Is Just Beginning

We’re at a turning point. The AI industry can